AI Computing As A Service

AI is becoming increasingly important in almost every business, with progressively more powerful models, including very efficient, cheap, and open-source ones like DeepSeek.

However, this need for computation linked to AI can be highly variable, making it rational for most users to depend on cloud services to provide it instead of directly owning the required chips and data centers. The same can be true for other compute-heavy tasks, like special effects generation.

One way to access such cloud services is to rely on the big cloud providers, like Amazon’s AWS (AMZN), Google Cloud (GOOGL), or Microsoft’s Azure (MSFT).

Another option is to go directly to an AI-focused cloud provider, with infrastructure entirely dedicated to the type of computing hardware required by AI calculations.

One of the largest providers of this kind is CoreWeave, which has been piling up GPUs since 2017. CoreWeave is now looking at filing for an IPO raising $4B, which would bring its valuation to $35B.

So investors might want to know more about the company and how it is positioned to benefit from the AI boom, even as uncertainty builds over how much computing power the industry will truly need in the future.

History of CoreWeave

CoreWeave is a newcomer in the cloud computing industry, having been founded in 2017. It initially specialized in computing for the cryptocurrency industry with GPUs (Graphics Processing Units).

GPUs were originally chips designed for graphics calculation, often for 3D video games. They are dedicated to performing thousands of simple calculations in parallel, instead of a few complex ones at a time, as central processors (CPUs) do.

As it turned out, GPUs were a perfect design for the type of calculation required for cryptocurrency mining, and also for AI using neural networks, resulting in explosive growth and leadership in AI hardware for GPU specialist Nvidia (NVDA).

(You can read more about the investment case for Nvidia in our dedicated report).

Then, in 2019, CoreWeave moved on to a more generalized cloud offering, still specialized in GPU-based calculation.

The early acquisition of a massive amount of GPUs by CoreWeave made it a key partner for AI startups looking for extra computing power.

“People were still able to access GPUs last year, but when it became extremely tight, all of a sudden it was like, where do we get these things?”

AI companies that were using CoreWeave spread the word to VCs, he added, who suddenly saw a gold mine: “They said, ‘Why aren’t we speaking to these guys’?”

Brannin McBee – CoreWeave co-founder & chief strategy officer

Being in the right place at the right time gave CoreWeave access to plenty of capital for growth, with multiple fundraising rounds, up to a $1.1B one in 2024.

“We believe CoreWeave has emerged as a key leader in building the mission-critical infrastructure foundation required to satisfy society’s current and future demand for high-performance compute at scale to power the generative AI revolution.”

Philippe Laffont – Founder & Portfolio Manager of Coatue

CoreWeave Overview

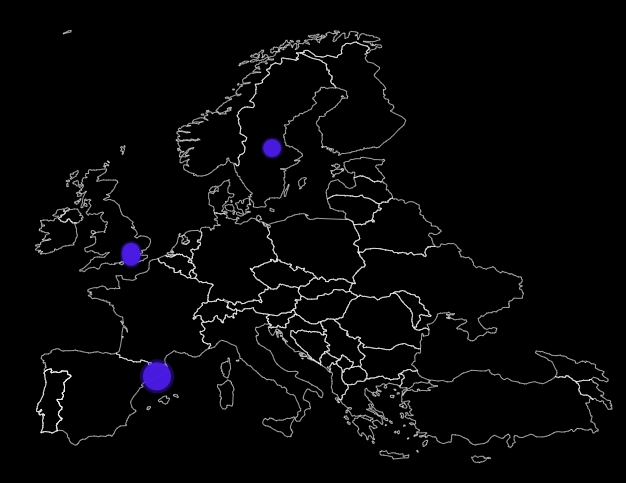

So far, the company has been mostly building its infrastructure in the US, with North America hosting most of its servers, and 3 installed in Europe.

Source: CoreWeave

CoreWeave constantly monitors the “health” of its equipment, identifying potential issues proactively and reducing the risk of downtime or underperformance of its servers.

In addition to computing capacity, CoreWeave provides a high level of security for its servers, a critical point given the data privacy and safety concerns that surround AI, as well as the intense competition from US and overseas firms.

While technically a competitor to Big Tech firms, CoreWeave can also be seen by them as a valued partner, with Microsoft, notably, being the company’s biggest customer.

In 2024, CoreWeave made around $2B in revenue and has a very aggressive growth target of $8B for 2025.
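To put that target in perspective, the short sketch below works out the growth it implies. It uses only the two rounded figures quoted above; the variable names are illustrative.

```python
# Rounded figures quoted in the article, in billions of USD.
revenue_2024 = 2.0
target_2025 = 8.0

# How many times larger the target is than the 2024 revenue.
growth_multiple = target_2025 / revenue_2024

# The same change expressed as year-over-year percentage growth.
growth_percent = (target_2025 - revenue_2024) / revenue_2024 * 100

print(f"Growth multiple: {growth_multiple:.1f}x")       # Growth multiple: 4.0x
print(f"Year-over-year growth: {growth_percent:.0f}%")  # Year-over-year growth: 300%
```

In other words, hitting the target would mean quadrupling revenue in a single year.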

Source: CoreWeave

A key feature of the AI cloud services provided by CoreWeave is flexibility. It can rapidly ramp up the available computing power for a company growing quickly or, alternatively, wind it down once a project is over. By having hundreds of different clients, CoreWeave can maximize the utilization of its precious GPUs.

“Two months ago, a company may not have existed, and now they may have $500 million of venture capital funding. And the most important thing for them to do is secure access to compute; they can’t launch their product or launch their business until they have it,”

Brian Venturo – CoreWeave CTO
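The utilization argument above can be illustrated with a toy calculation. This is a minimal sketch, not CoreWeave's actual scheduling: the three clients and their hourly GPU demand are invented numbers, chosen so that their peaks fall at different times.

```python
# Hypothetical hourly GPU demand for three made-up clients (not real data).
demand = {
    "client_a": [100, 20, 10, 30],
    "client_b": [10, 90, 20, 10],
    "client_c": [20, 10, 80, 40],
}

def dedicated_capacity(demand):
    """GPUs needed if each client owns enough hardware for its own peak."""
    return sum(max(series) for series in demand.values())

def pooled_capacity(demand):
    """GPUs needed if one provider serves the combined demand hour by hour."""
    return max(sum(hour) for hour in zip(*demand.values()))

def utilization(demand, capacity):
    """Average fraction of the given capacity that is actually in use."""
    hours = len(next(iter(demand.values())))
    total_used = sum(sum(series) for series in demand.values())
    return total_used / (capacity * hours)

owned = dedicated_capacity(demand)   # 100 + 90 + 80 = 270 GPUs
shared = pooled_capacity(demand)     # busiest combined hour = 130 GPUs

print(f"Dedicated: {owned} GPUs at {utilization(demand, owned):.0%} utilization")
print(f"Pooled:    {shared} GPUs at {utilization(demand, shared):.0%} utilization")
```

With these assumed numbers, the pooled provider covers the same demand with 130 GPUs instead of 270, and average utilization rises from about 41% to about 85%. The effect depends on client peaks not coinciding, which is more plausible with hundreds of clients than with a handful.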

CoreWeave Advantages

Cost Advantages

In most industries, it usually pays off to be somewhat specialized. This gives the company some extra level of expertise and helps it offer its products or services at the cheapest price.

This seems to be the case for CoreWeave, as it claims to provide its service up to 35x faster and 80% less expensive than legacy providers.

While these numbers might reflect some cherry-picking of the competitors to whom it is compared, it seems to be overall true that CoreWeave is cheaper than most of the industry.

For example, an extensive comparison published by cloud provider DigitalOcean indicates that the cheapest GPU provider is CoreWeave, with a price of $0.35/h for access to Quadro RTX 4000 GPUs.

Source: CoreWeave

It also seems that CoreWeave can compete on price with giant Oracle (ORCL), which has aggressively tried to reduce cloud GPU prices to grab market share among startups.

(Read more about this in our dedicated report “Oracle (ORCL): Database Giant, Stargate, and DeepSeek”)

This dedication to providing GPU compute and nothing else gives CoreWeave strong expertise in maximizing the utilization of the chips, reducing the cost per use.

“When the Big Three are building a cloud region, they’re building to serve the hundreds of thousands or millions of what I would call generic use cases for their user base, and in those regions they may only have a small portion of capacity peeled off for GPU compute.”

Brian Venturo – CoreWeave CTO

The cost advantage also comes from having been an early provider of cloud GPU services, which gave the company the scale required to spread overhead, R&D, and similar fixed costs over more units of compute.
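The scale effect can be sketched with simple arithmetic. The function and all dollar figures below are hypothetical, chosen only to show how fixed costs shrink per GPU-hour as volume grows; they are not CoreWeave's numbers.

```python
def cost_per_gpu_hour(fixed_costs, variable_cost_per_hour, gpu_hours):
    """Total cost per GPU-hour: variable cost plus the fixed costs
    (overhead, R&D, etc.) spread evenly over all GPU-hours sold."""
    return variable_cost_per_hour + fixed_costs / gpu_hours

# Hypothetical inputs: $1M of fixed costs and $0.20/h of variable cost.
fixed = 1_000_000
variable = 0.20

small_provider = cost_per_gpu_hour(fixed, variable, gpu_hours=1_000_000)
large_provider = cost_per_gpu_hour(fixed, variable, gpu_hours=10_000_000)

print(f"Small provider: ${small_provider:.2f} per GPU-hour")  # $1.20
print(f"Large provider: ${large_provider:.2f} per GPU-hour")  # $0.30
```

Under these assumptions, selling ten times more GPU-hours cuts the all-in cost per hour by three quarters, even though the variable cost is unchanged.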

Technical Expertise

As a specialist, CoreWeave is also able to provide customized data centers for AI companies. So when an AI company is looking to build a very specific type of model, CoreWeave can also build a bespoke infrastructure specifically matching this need.

This expertise also carries over to building generalist GPU-focused data centers, leading to optimization of the manpower required, energy consumed, cooling systems, etc.

It also frees clients from having to manage the hardware interface, GPU health, etc., letting them concentrate on their core competency instead.

This is why top AI companies are partnering with CoreWeave, like Inflection AI with a $1.3B GPU cluster paid for by a fresh funding round.

“They called us and said, ‘Guys, we need you to build one of the most high-performance supercomputers on the planet to support our AI company.’

They call us and they say, ‘This is what we’re looking for, can you do it?’ — and I think we have two of the top five supercomputers that we are building right now in terms of FLOPS.”

Brannin McBee – CoreWeave co-founder & chief strategy officer

Symbiosis With Nvidia

While it also provides some other chips, most of CoreWeave’s computing power is based on Nvidia chips. GPUs have been in short supply since the crypto boom and then the AI boom, with semiconductor foundries struggling to keep up with demand.

This has led to a situation where companies like Nvidia can, to some extent, pick the AI winners simply by delivering more quickly or more slowly to some of their clients.

Nvidia has been rather generous with CoreWeave, often giving it a spot at the front of the line for GPU deliveries, ahead of cloud leaders like AWS. This is because AWS, as well as most of the other large cloud providers, is looking to reduce its dependency on Nvidia by developing its own AI chips.

In the long run, this might be an issue for Nvidia, as many of its current clients are looking at the company’s fat margins and thinking they could instead keep the money through vertical integration. However, this turns into an advantage for CoreWeave, as the company focuses only on building data centers, making it a perfect partner for Nvidia.

“It certainly isn’t a disadvantage to not be building our own chips. I would imagine that that certainly helps us in our constant effort to get more GPUs from Nvidia at the expense of our peers.”

Brannin McBee – CoreWeave co-founder & chief strategy officer

As a result, CoreWeave has built an image as a company that can deliver on its promises and provide the needed computation when larger cloud providers struggle to keep up, helped by its access to many of Nvidia’s latest GPUs, including the H100 and H200.

Source: CoreWeave

The Super GPU Era

More recently, CoreWeave became the first cloud provider with generally available Nvidia GB200 NVL72 instances. The GB200 NVL72 is designed to act as a single massive GPU, making it a lot more powerful than even the previously record-breaking H100 model. It should also be a lot more energy efficient, a crucial point because, at the speed at which AI data centers are being built, the AI industry might run short of energy before it runs short of chips.

Source: Nvidia

This is important, as the design of just piling more GPUs on top of each other to build an AI data center is becoming obsolete.

“In data centers today, you can’t fit the same number of NVIDIA H100 servers or GB200 Superchip compute trays in a cabinet as you could earlier GPU generations because of the higher power and cooling demands.

The improved thermal efficiency and power savings allow us to be more generous with how many GPUs we can fit into a rack—which means more GPUs for our customers.”

CoreWeave Future

How Much Compute?

The open, multi-trillion-dollar question in the tech industry is the future of AI.

On one hand, it is clear that the technology is progressing faster than most anticipated. This should, in theory, be ideal for companies like CoreWeave, which will naturally grow at least as fast as the whole AI industry. And likely quicker, as hyper-scalers and cloud providers will be among the largest beneficiaries of AI, which will become omnipresent enough for every company to use it in one form or another.

On the other hand, it is not clear how much computing power AI applications will actually require in the long term. The industry is still trying to factor in the revelation that China’s DeepSeek can offer similar results at 1/25th of the price of America’s OpenAI.

Until now, the assumption was that better models would always be hungrier for computing power, to the point that raw electrical power availability has become a concern and big tech companies have rushed to secure future supply from nuclear power plants.

DeepSeek has severely shaken this idea. What if the future of AI is a lot of small, ultra-efficient open-source AIs instead of centralized applications in gigawatt data centers?

If this is the future of AI, it would be a severe threat to companies like OpenAI, built on the assumption that their computing advantage would be sufficient to create a business moat.

However, this would be unlikely to hurt the long-term prospects of CoreWeave. If AI becomes that cheap to train and use, virtually every company will want its own AI models for customer service, maintenance management, HR, sales, marketing, etc. Most of these companies will not want to manage their own hardware to run these AIs. A model-agnostic, low-cost provider like CoreWeave is likely to win out over Big Tech cloud suppliers, which are more likely to be suspected of pushing their own solutions and AI models.

Which GPUs?

So far, CoreWeave has mostly relied on chips from the industry leader, Nvidia. With the adoption of the GB200, this will not change any time soon.

In the long term, it could, however, be that some companies will manage to replicate Nvidia’s prowess in parallel computing and GPU-like technology. If that’s the case, CoreWeave will be able to slowly move over to such a new provider if it needs to.

The same can be said if a new radical way of doing AI calculation emerges. CoreWeave’s scale and network of AI-focused clients would make it a prime partner for the deployment of this new technology.

This is especially true for the same reason that made it a key partner of Nvidia: CoreWeave will never try to replicate such an AI chip internally (unlike Google, Microsoft, Meta, AWS, etc.), and can instead help it deploy as fast as possible.

(You can read more about the potential future GPUs for AI applications in this article from DigitalOcean)

Crypto

Another potential opportunity for CoreWeave lies in cryptocurrency. While the company has, to a large extent, pivoted to the even larger AI market, crypto is still an activity requiring a lot of computational power.

As Donald Trump is planning a national cryptocurrency reserve, holding Bitcoin, ether, XRP, Solana, and Cardano, it is clear that cryptos might become an important part of the financial and payment ecosystem of the USA.

And the more official cryptocurrencies become, the more likely they are to rely on safe and known infrastructure providers like CoreWeave.

So we could expect this to remain a not insignificant part of the company’s business in the future as well.

Conclusion

CoreWeave is not so much an AI company as it is a service provider to an explosively growing industry. This means that it is a lot less exposed than most AI companies to the vagaries of having to choose the right type of neural network technology, method of training, etc.

Instead, as long as it secures access to the latest and most powerful GPUs, it is likely to stay a partner of choice for every startup working on AI.

The arrival of powerful open-source models like DeepSeek is potentially a strong boost for CoreWeave’s business, even if it reduces the compute intensity of the industry as a whole. This is because it opens the way for many more competitors to OpenAI by reducing the VC money required to start.

Such smaller and more nimble startups are not going to build their own AI data centers and infrastructure but will rely on cloud providers. The same will be true for larger enterprises looking to develop their own proprietary AIs and unwilling to have them hosted on the servers of companies that might be interested in their secret data.

So, with an IPO ahead, CoreWeave could be a way for investors to bet on the whole AI industry while not having to understand the subtleties of different AI models and technology.