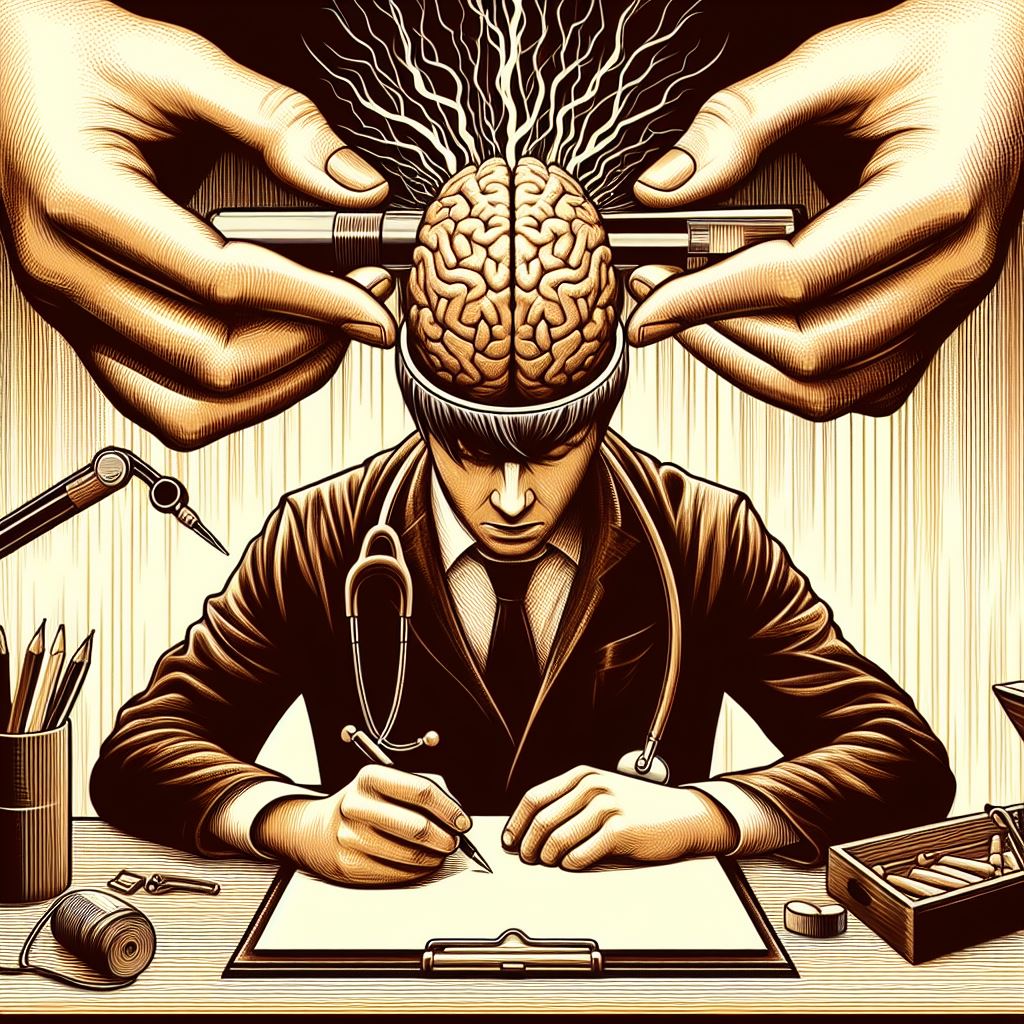

In a bizarre turn of events, a captivating tale about the supposed suicide of Israeli Prime Minister Benjamin Netanyahu’s psychiatrist has flooded the online sphere, igniting a storm of controversy. The story, which first surfaced in 2010, resurfaced this week in AI-generated form, coinciding with heightened tensions in the Middle East due to the Israeli invasion of Gaza. The suicide note allegedly left by the therapist accuses Netanyahu of draining the life out of him, adding a sinister layer to an already complex geopolitical landscape.

Against the backdrop of the ongoing situation in Gaza and the profound human crisis attributed to Israel, opinions remain divided over the story’s veracity, even though numerous sources say it originated on an old satirical blog. The recent surge in social media shares and discussions of the suicide note has intensified scrutiny.

Amid this discourse, Dr. Moshe Yatom emerges as a prominent figure: a revered psychiatrist renowned for his extensive contributions to the field of mental health in Israel. Dr. Yatom has garnered widespread respect in the Israeli community for his unwavering commitment to aiding countless individuals struggling with mental illness over the years.

AI-generated news and misinformation wave

As tensions in the Middle East escalate, a concerning trend is emerging: the proliferation of AI-generated stories on the internet. These stories, seemingly indistinguishable from genuine news, are disseminated across numerous websites, amplifying misinformation on a global scale. This new breed of AI-powered outlets mimicking established news sources poses a serious threat to the integrity of information, particularly in critical times. While misinformation has long been a tool of warfare, its potency is now magnified by AI, reshaping the landscape of contemporary conflict. Notably, pro-Israeli media outlets have also been using false information to shape public opinion.

Websites that once relied on human writers now embrace AI tools, allowing them to churn out vast amounts of content at unprecedented speed and minimal cost. This phenomenon has led to the emergence of hundreds of AI-powered sites masquerading as legitimate news outlets. The story of the Israeli Prime Minister’s psychiatrist serves as a glaring example of how AI-generated content infiltrates the online space, blurring the lines between fact and fiction.

These AI-generated news sites, including Global Village Space, a Pakistani digital outlet, play a pivotal role in disseminating such information. NewsGuard’s analysis of the site’s content reveals extensive use of AI chatbots to generate content, leading to alarming similarities between the fictitious 2010 article and the recent surge in misinformation. However, the technology for identifying AI-generated misinformation is not yet fully reliable.

The alarming growth of AI-generated news outlets

The exponential rise of AI-generated news and information sources presents a unique challenge for discerning consumers. McKenzie Sadeghi, an analyst at NewsGuard, points out the dangers associated with these AI-powered platforms. The ease with which AI can replicate and disseminate misleading narratives raises concerns that users will perceive these platforms as legitimate and trustworthy.

The impact of AI-generated content extends beyond online platforms, reaching television shows in countries like Iran. The fabricated story about the Israeli psychiatrist found its way onto a television program, demonstrating the widespread influence and potential consequences of AI-generated misinformation. Despite being exposed, some sites, including Global Village Space, resort to relabeling their content as “satire,” further complicating the task of distinguishing fact from fiction.

Human intent vs AI – Who shapes truth?

As we navigate the murky waters of AI-generated news, one cannot help but question the broader implications for information integrity and public perception. The Israeli Prime Minister’s psychiatrist saga serves as a stark reminder of the challenges posed by the unchecked growth of AI-generated content.

In an era where misinformation spreads like wildfire, it is crucial to address the root causes and assess the impact on global narratives. Given that generating news on any AI platform usually requires some degree of human input in the form of a prompt, human intent cannot be overlooked or dismissed. The question still lingers: How can we safeguard the authenticity of information in the face of the growing influence of AI-generated stories, especially during critical moments in world affairs?