The robots of tomorrow will possess senses comparable to those of humans. AI algorithms already enable devices to see, smell, and hear, using sensors and advanced algorithms to simulate these senses. However, one sense has so far eluded researchers: touch.

Now, a group of researchers from the Stevens Institute of Technology has revealed a novel method that emulates touch. Here’s what you need to know.

Researchers have long sought to emulate touch in robotic systems. A robot that could feel would open the door to many new use cases. As more people begin to work alongside these machines, interest in robots that can ‘feel’ continues to rise. Engineers believe this sense is crucial for improving efficiency, capabilities, and workplace safety.

Think of robots that could tell when they bump into you and react accordingly. This ability would reduce the risks for people who work closely with robots and let the machines take on more precise tasks that were previously reserved for humans.

Emulating Touch Through an Advanced AI Study

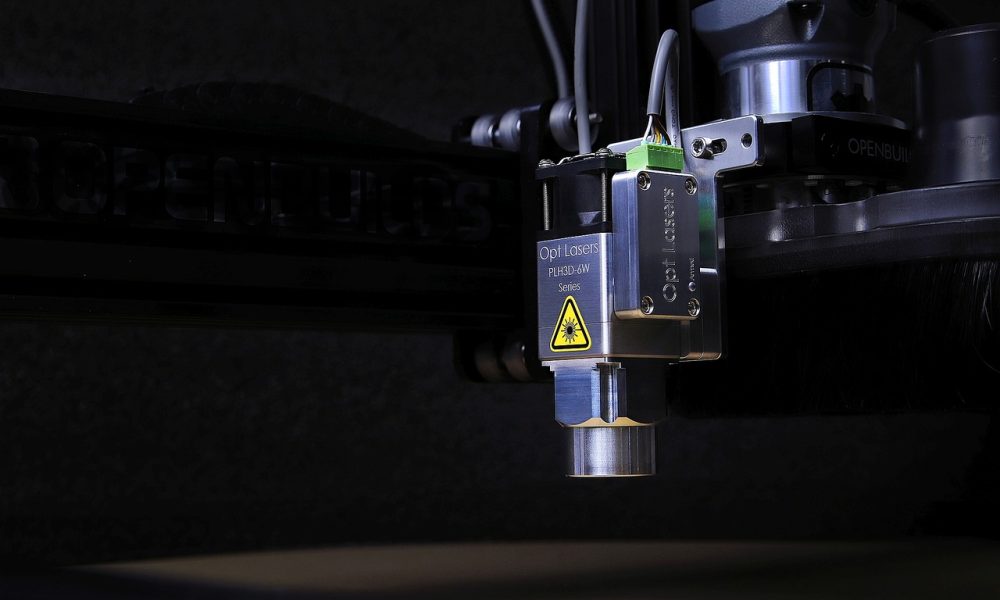

The study, published in the journal Applied Optics, describes how researchers used lasers and an AI algorithm to simulate touch. The team combined AI and quantum technology, running the experiments on a quantum lab setup.

Quantum interactions provide a wealth of data that can be used to build and improve AI models. The approach combines advanced machine learning, a raster-scanning single-photon LiDAR, and the speckle noise that setup records, which serves as a feedback signal.

Emulating Touch Using a Photon-Firing Scanning Laser

The team built a raster-scanning single-photon LiDAR that can be set to pulse at different intervals, letting engineers record any changes in the light reflected from the surface. Every surface scatters light differently depending on its makeup. The researchers recognized this and concluded that it offered the best way to give the system the ability to perform fast topography scans.
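To make the topography idea concrete, here is a minimal Python sketch of how a raster-scanning time-of-flight LiDAR could turn round-trip photon arrival times into a height map. It assumes each raster point reports one round-trip time in seconds and that heights are measured against a reference plane at a known standoff distance; the function names and numbers are hypothetical illustrations, not the Stevens team's code.

```python
# Speed of light in vacuum, metres per second.
C = 299_792_458.0


def round_trip_to_distance(t_seconds: float) -> float:
    """Convert a round-trip time of flight into a one-way distance in metres."""
    # The pulse travels to the surface and back, so halve the path length.
    return C * t_seconds / 2.0


def height_map(arrival_times: list[list[float]], standoff_m: float) -> list[list[float]]:
    """Turn a raster grid of round-trip times into surface heights in metres.

    Heights are relative to a hypothetical reference plane `standoff_m`
    away from the scanner: points closer than the plane come out positive.
    """
    return [
        [standoff_m - round_trip_to_distance(t) for t in row]
        for row in arrival_times
    ]


if __name__ == "__main__":
    standoff = 0.5  # metres (hypothetical)
    # 2x2 raster: three points on the reference plane, one raised by 100 micrometres.
    t_flat = 2 * standoff / C
    t_raised = 2 * (standoff - 100e-6) / C
    grid = [[t_flat, t_flat], [t_flat, t_raised]]
    for row in height_map(grid, standoff):
        print([f"{h * 1e6:.1f} um" for h in row])
```

Each raster point is handled independently, which mirrors how a single-pixel scanner builds an image one point at a time.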

Algorithmic AI Models

As a first step, the system scans the surface with the proprietary laser, building a detailed image of the item. That image contains random fluctuations called speckle noise. In the past, speckle noise was seen as a hindrance to optical clarity because it lowers resolution.

The researchers noticed that this signal was more than interference: it provides a unique signature for every surface based on its roughness. This data was fed to the AI system, which decoded it to determine the structure’s dimensions, height, and roughness.


The researchers used this setup to scan multiple types of surfaces and train the AI algorithm. Specifically, the system registered backscattered photons from different points across the surface. These photons were collected into an optical fiber and counted with a single-photon detector. Because the detector can distinguish speckle noise from other interference, the researchers could use that speckle to gauge the object’s smoothness.
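One common way to reduce speckle to a single roughness-sensitive number is the speckle contrast: the standard deviation of the signal divided by its mean. A single-photon detector reports counts rather than intensity, so Poisson shot noise inflates the variance by roughly the mean count; Mandel's relation, Var(n) = mean + mean² · C², lets you subtract that term first. This is a standard speckle-statistics estimate offered as an assumption; the source does not say which features the Stevens model actually uses, and the function name is hypothetical.

```python
import math
from statistics import fmean, pvariance


def speckle_contrast_from_counts(counts: list[int]) -> float:
    """Estimate speckle contrast from photon counts at many raster points.

    Removes the Poisson shot-noise contribution (equal to the mean count)
    from the variance before dividing by the squared mean.
    Returns 0.0 when no variance remains beyond shot noise.
    """
    if not counts:
        raise ValueError("need at least one count")
    mean = fmean(counts)
    if mean <= 0:
        raise ValueError("mean count must be positive")
    excess = pvariance(counts, mu=mean) - mean  # variance beyond shot noise
    return math.sqrt(excess / mean**2) if excess > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical counts from a strongly speckled patch.
    print(speckle_contrast_from_counts([10, 30, 10, 30]))
```

A contrast near 1 corresponds to fully developed speckle; how the number maps to roughness also depends on wavelength, geometry, and detector area, which is one reason a learned model can help instead of a fixed formula.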

Emulating Touch Test

To test their theory, the team set up a single-pixel, raster-scanning, single-photon-counting LiDAR system. This device suited the research because it produces a collimated laser beam fired in picosecond pulses, providing precise coverage and fast response.
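A quick worked estimate shows why picosecond pulses matter. In time-of-flight ranging, a timing uncertainty Δt maps to a depth uncertainty Δz through the round-trip relation below; the 1 ps value is an illustrative assumption, not the system's measured timing jitter.

```latex
\Delta z = \frac{c\,\Delta t}{2},
\qquad
\Delta t = 1\ \text{ps}
\;\Rightarrow\;
\Delta z = \frac{(3\times 10^{8}\ \text{m/s})(10^{-12}\ \text{s})}{2}
= 1.5\times 10^{-4}\ \text{m} = 150\ \mu\text{m}.
```

By this estimate, raw timing alone resolves depth only to roughly 150 microns per picosecond of uncertainty, far coarser than the micron-scale roughness the study targets. That gap suggests, as an inference rather than a claim from the study, that the speckle statistics carry most of the fine surface information.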

The engineers chose 31 industrial sandpapers as their test subjects, covering a wide range of grades, with surface roughness ranging from 1 to 100 microns. The laser fired pulses through the transceiver at each sandpaper, and the backscattered light, including its speckle, was collected and analyzed by the AI system.
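The calibration step can be pictured as supervised regression: each sandpaper contributes a feature measured by the LiDAR plus a known roughness label, and a model learns the mapping between them. The sketch below fits a one-feature least-squares line in pure Python. The choice of speckle contrast as the feature, the linear model, and the sample values are all hypothetical stand-ins; the source does not describe the team's actual model or data.

```python
from statistics import fmean


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired samples")
    mx, my = fmean(xs), fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("feature values must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


# Hypothetical calibration set: (speckle feature, known roughness in microns).
calibration = [(0.20, 5.0), (0.35, 20.0), (0.50, 42.0), (0.65, 60.0), (0.80, 85.0)]
features = [f for f, _ in calibration]
roughness_um = [r for _, r in calibration]

slope, intercept = fit_line(features, roughness_um)


def predict_roughness(feature: float) -> float:
    """Estimate roughness in microns for a new, unlabeled surface."""
    return slope * feature + intercept


print(f"predicted roughness: {predict_roughness(0.42):.1f} um")
```

With only a few dozen labeled surfaces, a model like this would normally be checked on samples held out from the fit, for example with leave-one-out cross-validation.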

Emulating Touch Study Results

The results of these tests show promise. The system initially estimated roughness to within about 8 microns, and fine-tuning and adjustments cut that error to about 4 microns. This level of accuracy is comparable to that of the industry’s leading solutions.
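Figures such as "8 microns" and "4 microns" usually summarize prediction error across test samples. The source does not say which metric the team used, so the sketch below assumes two common choices, mean absolute error (MAE) and root-mean-square error (RMSE), applied to made-up predictions.

```python
import math


def mean_absolute_error(true_um: list[float], pred_um: list[float]) -> float:
    """Average absolute difference between true and predicted roughness."""
    if len(true_um) != len(pred_um) or not true_um:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(t - p) for t, p in zip(true_um, pred_um)) / len(true_um)


def rmse(true_um: list[float], pred_um: list[float]) -> float:
    """Root-mean-square error; weights large misses more heavily than MAE."""
    if len(true_um) != len(pred_um) or not true_um:
        raise ValueError("inputs must be non-empty and the same length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(true_um, pred_um)) / len(true_um))


# Hypothetical held-out results, in microns.
truth = [10.0, 25.0, 40.0, 60.0]
preds = [13.0, 22.0, 45.0, 57.0]
print(f"MAE  = {mean_absolute_error(truth, preds):.1f} um")
print(f"RMSE = {rmse(truth, preds):.1f} um")
```

Here the MAE is 3.5 microns and the RMSE is about 3.6 microns, because the single 5-micron miss counts for more once errors are squared.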

The system appeared to work best on surfaces with a fine grain rather than pronounced roughness. Impressively, the engineers were able to determine the surface structure of the sandpapers accurately with minimal effort, which could open the door for this technology to reshape how industries operate in the coming years.

Emulating Touch Study Benefits

This research could unlock benefits across multiple industries. For one, the method could provide large cost savings over the systems currently in use. It could also let manufacturers reduce headcount if the AI proves more accurate than current inspection methods, lowering overhead and improving their bottom line.

Fast Surface Mapping

The speed at which the system scans a surface is another advantage. The laser only needs to scan the surface for a few moments before a determination is made. As a result, the method is faster and easier to use, allowing manufacturers to complete more scans and save time and money.

Low-cost Integration

Another major advantage is that the research offers a low-cost way to integrate the technique into existing systems. LiDAR is often used to check the structural integrity of core components, and this new approach could upgrade the LiDAR already in use so that it can make measurements at the micron level.

Quality Control

The new form of surface scanning could improve quality control for intricate, high-precision components. Engineers have long relied on inspection systems to ensure that vital components of aircraft and other products are free of defects that could spiral into serious issues.

Enhanced Sorting

Robot sorters are already in high demand and in use around the globe. This upgrade could improve their capabilities by giving them an additional sense to draw on when determining a product’s makeup and how it should be sorted. For example, a robot hand that could feel might check produce for firmness to determine whether it is ripe.

Emulating Touch Researchers

Engineers from the Stevens Institute of Technology spearheaded the research into the laser topography system, working closely with Yuping Huang, director of the Center for Quantum Science and Engineering (CQSE). Daniel Tafone and Luke McEvoy also received credit for their work on the project.

Potential Applications

This technology has many potential applications. LiDAR already plays a vital role in safety systems, and this research could significantly improve those capabilities, enabling engineers to monitor crucial components in real time at a level of detail that was not possible before.

Healthcare

The healthcare industry has seen growing demand for robots that can feel like humans, and these systems could find multiple uses there. One interesting use case would be scanning moles for signs of potentially fatal melanoma. The laser-based algorithm could detect the tiny differences that separate a harmless mole from a dangerous one, which could help save many patients’ lives.

Enhanced LiDAR

LiDAR is used in a variety of products today. Smart cars, robots, smartphones, and other devices rely on LiDAR to act as their eyes, and many robot vacuums use some form of LiDAR to avoid obstacles. This new technology could also help micro-robots navigate environments such as the human body and deliver life-saving treatment exactly where it is needed.

A Company That Could Benefit from This Research

Several robotics firms could integrate this technology and improve their results today. Robotics is a fast-growing sector that now spans nearly every industry. From conducting surgeries to picking fruit, these devices could see a major boost with the introduction of a touch emulator.

Samsara

Samsara (IOT) is a San Francisco-based IoT firm. Sanjit Biswas and John Bicket founded the company in 2015 to help enterprise clients track and monitor their logistics. Today, it provides a wide range of products for this purpose, including AI dashcams, route optimization, equipment tracking, site monitoring, and telematics.

Samsara is a major player in the IoT (Internet of Things) market. IoT devices are the smart devices you see in use everywhere today: anything with an internet connection, a sensor, and the ability to communicate data. The IoT sector now includes billions of smart devices globally.


Samsara enables companies to integrate these devices into their logistics to improve results, efficiency, and security. IoT devices can monitor products in real time, including their condition, authenticity, location, and much more.

Analysts see Samsara as well positioned to grow as the IoT industry expands. The company has a market cap of $30.433B and has backing from some of the biggest names in the industry. Notably, the stock was listed as one of Harvard University’s top stock picks this year, boosting investor confidence.

Other Attempts to Emulate Human Senses

Examining the race to make robots feel reveals some interesting developments. The first is that there are two very different approaches to giving robots a sense of touch. Hardware solutions add devices that simulate touch by registering pressure and heat, whereas software solutions use algorithms that interpret feedback to simulate touch. Here are some other ways researchers have found to give robots the ability to feel.

High-Performance Ceramics

A recent study demonstrates how micro-ceramic particles can be embedded in a flexible, skin-like layer so that the device registers heat and pressure. The tiny ceramic particles offer an effective way to carry electrical pulses across a flexible surface.

In this research, engineers developed robotic skin that could tell when someone brushed against it and pulled away. They then created a smart prosthetic that lets the user feel surfaces and react accordingly. They noted that their robotic skin could register touch through these pulses even at the lightest pressures.

Artificial Nerves

Another exciting breakthrough in robotic touch came in October, when engineers from Stanford University’s Zhenan Bao Research Group created an artificial nerve. The device was designed to work like its biological counterpart, allowing robots to respond to touch effectively.

The artificial nerve can be broken into three components. Mechanoreceptors act as resistive pressure sensors; organic ring oscillators function similarly to neurons; and organic electrochemical transistors tie these parts together so the whole system can operate.
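One way to picture how a resistive sensor and an oscillator could work together is a toy model in which pressure lowers the sensor's resistance and the oscillator's pulse rate rises as resistance falls, so pulse frequency encodes how hard something is pressed. Everything below is a hypothetical illustration: the resistance curve, the 1/(R·C) frequency rule, and all constants are assumptions, not the Stanford group's device parameters, and the transistor stage is not modeled.

```python
def sensor_resistance(pressure_kpa: float, r0_ohm: float = 1e6, k: float = 0.5) -> float:
    """Hypothetical resistive pressure sensor: resistance falls as pressure rises."""
    if pressure_kpa < 0:
        raise ValueError("pressure must be non-negative")
    return r0_ohm / (1.0 + k * pressure_kpa)


def oscillator_frequency(resistance_ohm: float, capacitance_f: float = 1e-9) -> float:
    """Toy oscillator whose pulse rate scales as 1 / (R * C), in hertz."""
    return 1.0 / (resistance_ohm * capacitance_f)


def pulse_count(pressure_kpa: float, window_s: float = 0.1) -> int:
    """Pulses emitted in a time window: the 'spike count' passed downstream."""
    f = oscillator_frequency(sensor_resistance(pressure_kpa))
    return round(f * window_s)


if __name__ == "__main__":
    for p in (0.0, 1.0, 5.0, 20.0):
        print(f"{p:5.1f} kPa -> {pulse_count(p)} pulses in 100 ms")
```

Encoding pressure as a pulse rate, rather than as a raw voltage, is what makes the output resemble the spike trains that biological nerves carry.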

Robot Sweater

Carnegie Mellon University introduced a Robot Sweater that could make industrial robots much safer. Today’s safety systems often require rigid pieces to be added to robots. The problem with this approach is that robots can’t be fully covered, because their moving parts need to remain flexible. This led researchers to consider a sweater-like fabric as the solution.

The Robot Sweater is a machine-knitted cover that can fit any three-dimensional shape, so it can be made to provide full protection for robots and their human co-workers. It works using two layers of metallic fibers integrated into the fabric. Whenever a person presses on the sweater, the layers make contact and close a circuit, notifying the robot of the incident and triggering a response.
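The two-layer idea can be pictured as a grid of switches: conductive strips in one layer run one way, strips in the other run crosswise, and pressing the fabric connects a row strip to a column strip. The sketch below scans such a grid to locate contacts and applies a simple stop rule. The row/column layout, the boolean contact matrix, and the function names are hypothetical simplifications; the source only says that touch closes a circuit between two metallic layers.

```python
Contact = tuple[int, int]


def find_contacts(closed: list[list[bool]]) -> list[Contact]:
    """Return (row, column) crossings where the two conductive layers touch.

    `closed[r][c]` is True when driving row strip r produces a signal on
    column strip c, i.e. the fabric is pressed at that crossing.
    """
    return [
        (r, c)
        for r, row in enumerate(closed)
        for c, is_closed in enumerate(row)
        if is_closed
    ]


def should_stop(closed: list[list[bool]]) -> bool:
    """Hypothetical safety policy: halt the robot on any detected contact."""
    return bool(find_contacts(closed))


grid = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]
print(find_contacts(grid), should_stop(grid))
```

Knowing which crossing closed, and not just that one did, is what would let a robot react differently to a bump on its elbow than to one on its wrist.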

Future of Emulating Touch Robots

Robots that can emulate touch are the future. These machines will open the door to closer integration with human coworkers, improving safety, cutting costs, and giving multiple industries solutions to long-standing problems. As a result, demand for robots that can emulate touch is likely to keep growing over the coming years. For now, expect to see more robot coworkers in the coming months.
